When we began architecting DevSecOps at Evanke, our goal was straightforward yet ambitious: establish an AI development environment that integrates security at every stage without sacrificing scalability or performance. Our approach had to go beyond standard DevOps practices, embedding security deeply into the lifecycle and infrastructure itself, and making sure the entire pipeline—from development through deployment—is both efficient and secure. Here’s how we built it from the ground up, integrating lessons learned and best practices that have become foundational to Evanke’s DevSecOps strategy.

Core Principles Behind Evanke’s DevSecOps Architecture:

- Infrastructure Automation (InfraOps)

- CI/CD Pipelines

- DataOps and MLOps Integration

- Security-by-Design

- Collaborative Development with Defined Roles

- Monitoring and Operational Excellence

Let's look at each principle in turn:

1. Infrastructure Automation (InfraOps)

Our infrastructure is fully managed through Infrastructure-as-Code (IaC), primarily leveraging Terraform for provisioning across AWS, Azure, and GCP environments. This ensures our deployments are repeatable, scalable, and secure by default. Terraform scripts provision the necessary cloud resources, manage network segmentation, and enforce strict firewall policies. We’ve also integrated Prometheus and Grafana to monitor system health and performance continuously, allowing proactive detection and resolution of infrastructure issues.
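
One way to back up the "strict firewall policies" idea is a small check that runs against a Terraform plan before it is applied. The sketch below is illustrative only and is not Evanke's actual tooling: it assumes the plan has been exported with `terraform show -json` and that firewall rules are modeled as `aws_security_group` resources. The function name `find_open_ingress` and the sample data are hypothetical.

```python
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}


def find_open_ingress(plan: dict) -> list:
    """Return addresses of AWS security groups that allow ingress from anywhere."""
    offenders = []
    for change in plan.get("resource_changes", []):
        if change.get("type") != "aws_security_group":
            continue
        # "after" holds the planned attribute values; it is null for deletions.
        after = (change.get("change") or {}).get("after") or {}
        for rule in after.get("ingress") or []:
            sources = set(rule.get("cidr_blocks") or [])
            sources |= set(rule.get("ipv6_cidr_blocks") or [])
            if sources & OPEN_CIDRS:
                offenders.append(change["address"])
                break  # one open rule is enough to flag the resource
    return offenders


# Hypothetical, trimmed-down plan; real plans carry many more fields.
sample_plan = {
    "resource_changes": [
        {"address": "aws_security_group.web", "type": "aws_security_group",
         "change": {"after": {"ingress": [
             {"from_port": 22, "to_port": 22, "cidr_blocks": ["0.0.0.0/0"]}]}}},
        {"address": "aws_security_group.db", "type": "aws_security_group",
         "change": {"after": {"ingress": [
             {"from_port": 5432, "to_port": 5432, "cidr_blocks": ["10.0.0.0/16"]}]}}},
    ]
}

print(find_open_ingress(sample_plan))  # ['aws_security_group.web']
```

A check like this could run as a pipeline step and block the apply whenever the returned list is non-empty. Azure and GCP resources would need their own rules.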

2. CI/CD Pipelines for AI Workflows

Early on, we recognized the necessity for a robust continuous integration and continuous deployment process. Our DevSecOps pipelines utilize Jenkins, Docker, and Ansible for end-to-end automation:

- Docker ensures consistent environments across development, testing, and production.

- Jenkins automates builds and tests at every commit, providing immediate feedback to developers.

- Ansible automates deployment to frontend and backend containers, reducing manual interventions and ensuring consistency.
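
The build, test, and deploy sequence above follows a fail-fast pattern: a failed stage stops everything after it, so a broken commit never reaches deployment. The sketch below models that control flow in plain Python. It is not Jenkins configuration, and the stage names and the `run_pipeline` helper are hypothetical.

```python
from typing import Callable, List, Tuple


def run_pipeline(stages: List[Tuple[str, Callable[[], bool]]]) -> List[Tuple[str, str]]:
    """Run stages in order; once one fails, mark the rest as skipped."""
    results = []
    failed = False
    for name, step in stages:
        if failed:
            results.append((name, "skipped"))
            continue
        ok = step()
        results.append((name, "passed" if ok else "failed"))
        failed = not ok
    return results


# Hypothetical stages: the test stage fails, so deploy never runs.
example = run_pipeline([
    ("build", lambda: True),
    ("test", lambda: False),
    ("deploy", lambda: True),
])
print(example)  # [('build', 'passed'), ('test', 'failed'), ('deploy', 'skipped')]
```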

We also experimented with more advanced deployment tools, such as ArgoCD for Kubernetes deployments and service mesh technologies like Istio. Argo was a great fit for our case, but Istio was not: although it provided powerful capabilities for traffic routing and observability within Kubernetes clusters, the overhead of managing it outweighed those benefits. We concluded that sticking with simpler, foundational tools was the preferable choice. That said, we still keep a separate environment with Istio set up for experimentation 🙂

3. Integrating DataOps and MLOps

Recognizing data as a critical asset, we structured DataOps to streamline data management. We use vector databases to support retrieval-augmented generation (RAG), document databases for our prompt libraries, and relational databases for tracking application metrics. Matching each store to its workload helps keep the data feeding our ML models consistent and high quality.
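
For readers new to RAG, the retrieval step a vector database performs can be sketched as a nearest-neighbor search over embeddings. The example below is a toy illustration with made-up three-dimensional vectors and hypothetical document IDs. A real vector database would use learned embeddings and an approximate index rather than a full scan.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query, index, k=2):
    """Return the IDs of the k documents most similar to the query."""
    ranked = sorted(index.items(), key=lambda item: cosine(query, item[1]), reverse=True)
    return [doc_id for doc_id, _ in ranked[:k]]


# Hypothetical embeddings; real ones have hundreds of dimensions.
index = {
    "vpc-guide": [1.0, 0.0, 0.0],
    "mlflow-notes": [0.0, 1.0, 0.0],
    "istio-eval": [0.7, 0.7, 0.0],
}

print(top_k([0.9, 0.1, 0.0], index))  # ['vpc-guide', 'istio-eval']
```

The retrieved documents would then be added to the prompt before it is sent to the model.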

Our MLOps layer, powered by MLflow, automates the management of ML model lifecycles. We’ve adopted structured practices, including automated dataset splitting, performance tracking, and model versioning, ensuring our AI models are not only accurate but reliably deployed and easy to update.
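
Automated dataset splitting can be done in several ways. One common approach, shown here as a sketch rather than as the method used at Evanke, is to hash each record's ID so that a record always lands in the same split across pipeline runs. The function name, ID format, and percentages are assumptions.

```python
import hashlib


def assign_split(record_id: str, val_pct: int = 10, test_pct: int = 10) -> str:
    """Deterministically map a record ID to 'train', 'validation', or 'test'."""
    digest = hashlib.sha256(record_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable value in 0..99
    if bucket < test_pct:
        return "test"
    if bucket < test_pct + val_pct:
        return "validation"
    return "train"


# Hypothetical record IDs; the same ID yields the same split on every run.
counts = {"train": 0, "validation": 0, "test": 0}
for i in range(1000):
    counts[assign_split(f"doc-{i}")] += 1
print(counts)
```

Because the assignment depends only on the ID, newly added records do not move existing records between splits, which keeps evaluation results comparable across model versions.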

4. Embedding Security at Every Level (Security-by-Design)

Security isn’t an afterthought at Evanke; it’s integrated into every step of our development process. To achieve this:

- Our cloud infrastructure uses Virtual Private Clouds (VPCs) and isolated private Kubernetes clusters, limiting the attack surface and controlling access points.

- Bastion hosts provide controlled, audited access to sensitive resources, and strict firewall configurations ensure secure internal communication.

- Regular security assessments, vulnerability scanning, and compliance checks are automated within our CI/CD workflows.
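
Automating vulnerability checks in CI/CD usually comes down to a gate: the pipeline reads the scanner's findings and fails the build when any finding meets a severity threshold. The sketch below assumes a hypothetical findings format (a list of dicts with a `severity` field) and does not match any specific scanner's output.

```python
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def blocking_findings(findings, threshold="HIGH"):
    """Return the findings at or above the threshold severity."""
    limit = SEVERITY_RANK[threshold]
    blocked = []
    for finding in findings:
        severity = str(finding.get("severity", "")).upper()
        # Unknown severities rank as CRITICAL so the gate fails closed.
        rank = SEVERITY_RANK.get(severity, SEVERITY_RANK["CRITICAL"])
        if rank >= limit:
            blocked.append(finding)
    return blocked


def gate_exit_code(findings, threshold="HIGH"):
    """Return 1 (fail the build) if any finding blocks, else 0."""
    return 1 if blocking_findings(findings, threshold) else 0


# Hypothetical scan results.
findings = [
    {"id": "EXAMPLE-1", "severity": "low"},
    {"id": "EXAMPLE-2", "severity": "Critical"},
]
print(gate_exit_code(findings))  # 1
```

A CI step could pass the returned value to `sys.exit` so the build fails when the gate does.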

5. Collaborative Development with Defined Roles

Our DevSecOps teams comprise cross-functional roles including Data Scientists, ML Engineers, Software Developers, Site Reliability Engineers (SREs), Data Engineers, and Cloud Administrators. Clearly defined roles, responsibilities, and operational standards ensure efficient collaboration and accountability. For example, data scientists and ML engineers closely collaborate during the model training process, while SREs and Cloud Administrators oversee the production environment.

6. Advanced Monitoring and Operational Metrics

To maintain high availability and performance, Evanke utilizes comprehensive monitoring and alerting:

- Prometheus captures real-time infrastructure metrics.

- Grafana dashboards visualize operational health, helping teams quickly identify bottlenecks or performance anomalies.

- Operational and model metrics are stored in relational databases, making insights available for continuous performance tuning and optimization decisions.
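
To make the last point concrete, here is a minimal sketch of storing model metrics in a relational table and querying them for a tuning decision. It uses an in-memory SQLite database purely for illustration. The table layout, column names, and helper functions are assumptions, not Evanke's schema.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open the metrics database and create the table if it is missing."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS model_metrics (
               model_name TEXT NOT NULL,
               model_version TEXT NOT NULL,
               metric TEXT NOT NULL,
               value REAL NOT NULL,
               recorded_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
           )"""
    )
    return conn


def record_metric(conn, model_name, model_version, metric, value):
    """Insert one metric row; the with-block commits the transaction."""
    with conn:
        conn.execute(
            "INSERT INTO model_metrics (model_name, model_version, metric, value) "
            "VALUES (?, ?, ?, ?)",
            (model_name, model_version, metric, value),
        )


def best_version(conn, model_name, metric):
    """Return the version with the highest recorded value, or None."""
    row = conn.execute(
        "SELECT model_version FROM model_metrics "
        "WHERE model_name = ? AND metric = ? ORDER BY value DESC LIMIT 1",
        (model_name, metric),
    ).fetchone()
    return row[0] if row else None


# Hypothetical model name and scores.
conn = open_store()
record_metric(conn, "ranker", "v1", "accuracy", 0.81)
record_metric(conn, "ranker", "v2", "accuracy", 0.86)
print(best_version(conn, "ranker", "accuracy"))  # v2
```

Keeping metrics in SQL means dashboards and ad hoc analyses can query the same tables.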

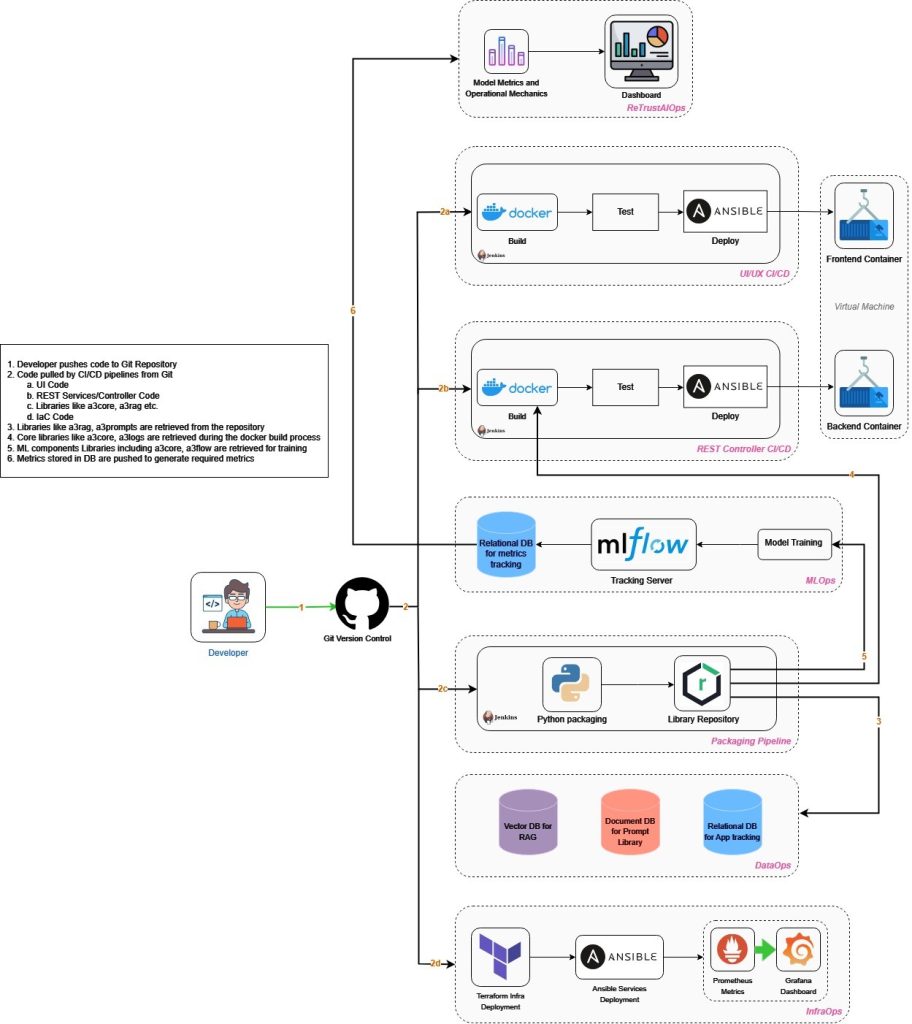

An overview of the workflow

- The infrastructure is provisioned with IaC tools like Terraform, and services are configured with configuration management tools such as Ansible. This is the foundational layer of the system.

- Developers push code to a Git version control repository.

- Jenkins-based CI/CD pipelines automatically retrieve the code for UI/UX, REST services, and library management.

- Core libraries and ML components (e.g., a3core, a3flow) are fetched during the Docker build process.

- Docker containerization provides consistent environments across deployments.

- Ansible automates the deployment of frontend and backend containers.

- MLflow concurrently manages model training workflows, tracking and storing performance metrics in a relational database.

- Dashboards visualize operational metrics, offering clear insights into model performance and system efficiency.

Continuous Improvement and Collaboration

Building Evanke’s DevSecOps environment has been a challenging yet immensely rewarding journey. Being able to deliver AI solutions on a robust, secure infrastructure has validated the decisions we made and the lessons we learned along the way. For anyone embarking on this path, remember that while the technology and tools are essential, it’s the commitment, collaboration, and adaptability of the people involved that truly drive success. Keep innovating, keep improving, and trust in the power of teamwork. Together, you’ll create solutions that exceed expectations.